
Abstract

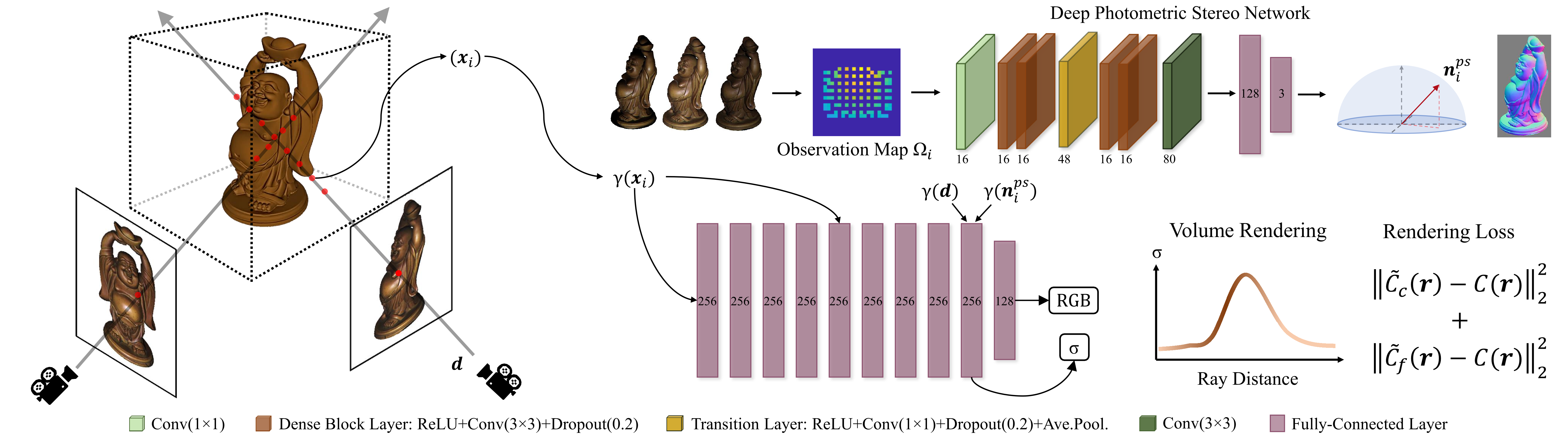

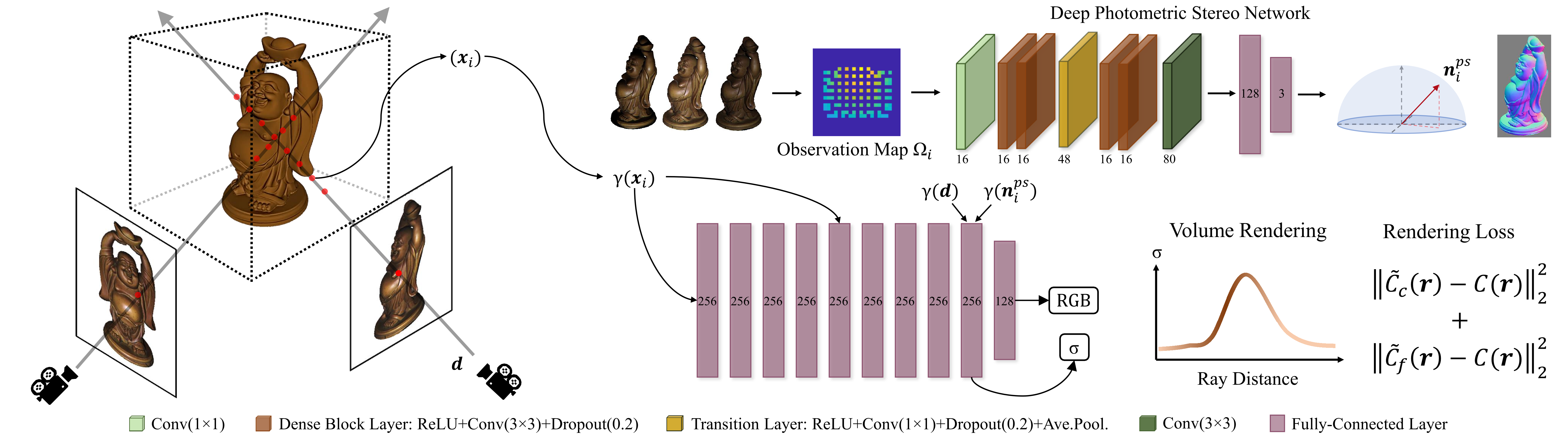

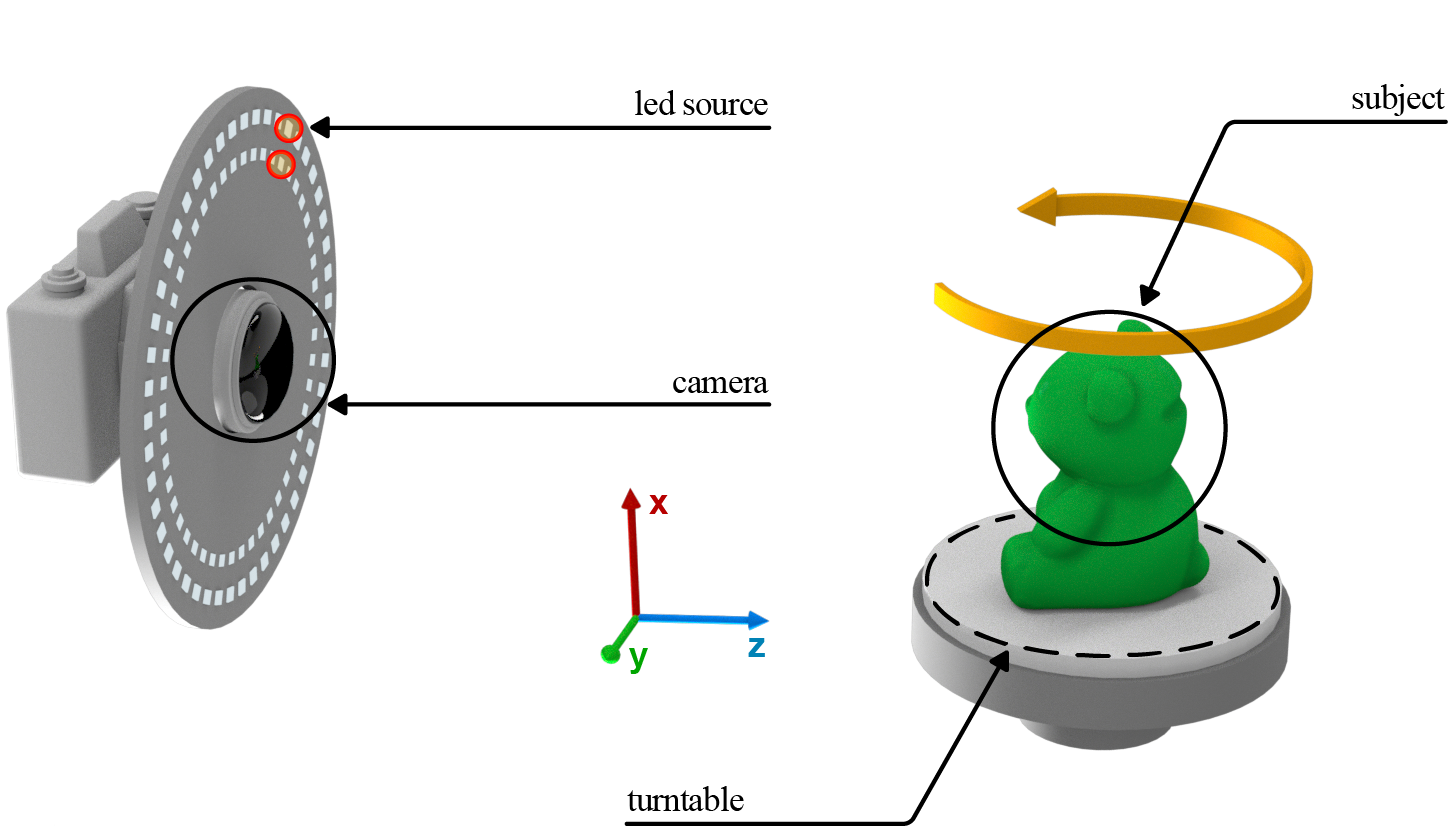

We present a modern solution to the multi-view photometric stereo (MVPS) problem. Our work exploits the image formation model of an MVPS experimental setup to recover a dense 3D reconstruction of an object from images. We obtain the surface orientation using a photometric stereo (PS) image formation model and blend it with a multi-view neural radiance field representation to recover the object's surface geometry. Unlike previous multi-stage frameworks for MVPS, in which position, iso-depth contours, or orientation measurements are estimated independently and fused later, our method is simple to implement and realize. Our method performs neural rendering of multi-view images while utilizing surface normals estimated by a deep photometric stereo network. We render the MVPS images by taking into account the object's surface normal at each 3D sample point along the viewing direction, rather than explicitly using the density gradient in volume space derived from 3D occupancy information. We efficiently optimize the proposed neural radiance field representation for the MVPS setup using a fully connected deep network to recover the object's 3D geometry. Extensive evaluation on the DiLiGenT-MV benchmark dataset shows that our method outperforms approaches that use only PS or only multi-view stereo (MVS), and gives results comparable to state-of-the-art multi-stage fusion methods.
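To make the rendering idea concrete, here is a minimal sketch of standard NeRF-style volume rendering along a single ray, where each sample's color is computed from a surface normal supplied from outside (for example, by a PS network) instead of from the gradient of the density field. This is an illustration under stated assumptions, not the paper's implementation: the names `volume_render` and `toy_radiance` are hypothetical, and `toy_radiance` is a simple Lambertian stand-in for the learned color network, which in practice would be a fully connected network taking position, viewing direction, and normal as input.

```python
import numpy as np


def volume_render(sigmas, colors, deltas):
    """NeRF-style quadrature along one ray.

    sigmas: (N,) densities, colors: (N, 3) per-sample RGB,
    deltas: (N,) distances between consecutive samples.
    Returns the composited RGB color and the per-sample weights.
    """
    alphas = 1.0 - np.exp(-sigmas * deltas)          # opacity of each segment
    # Transmittance T_i = prod_{j<i} (1 - alpha_j): light surviving up to sample i.
    trans = np.cumprod(np.concatenate([[1.0], 1.0 - alphas[:-1]]))
    weights = trans * alphas                          # contribution of each sample
    rgb = (weights[:, None] * colors).sum(axis=0)
    return rgb, weights


def toy_radiance(normals, light_dir, albedo):
    """Hypothetical stand-in for the color network: Lambertian shading driven by
    externally supplied per-sample normals (assumed to come from a PS network),
    not by normals derived from the density gradient."""
    shading = np.clip(normals @ light_dir, 0.0, None)  # max(0, n . l) per sample
    return albedo * shading[:, None]


# Usage: four samples along a ray; the density jumps at the third sample (the surface).
sigmas = np.array([0.0, 0.1, 50.0, 50.0])
deltas = np.full(4, 0.1)
normals = np.tile([0.0, 0.0, 1.0], (4, 1))   # per-sample normals, assumed given
light = np.array([0.0, 0.0, 1.0])
albedo = np.array([0.8, 0.5, 0.3])

colors = toy_radiance(normals, light, albedo)
rgb, w = volume_render(sigmas, colors, deltas)
```

Because the normals enter only through the per-sample color, the same compositing weights could also be used to render an expected normal per pixel; whether and how the paper supervises such a quantity is not shown here.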


Neural Radiance Fields Approach to Deep Multi-View Photometric Stereo. Berk Kaya, Suryansh Kumar, Francesco Sarno, Vittorio Ferrari, Luc Van Gool. IEEE/CVF WACV 2022, Waikoloa, Hawaii, USA.


This work was funded by a Focused Research Award from Google (CVL, ETH 2019-HE-318, 2019-HE-323). Suryansh Kumar's project is supported by "ETH Zurich Foundation and Google, Project Number: 2019-HE-323" for bringing together the best of academic and industrial research.